In the past two decades, thanks in part to new technologies such as fMRI, PET, and MEG, science’s understanding of how the brain works, learns, and solves problems has increased dramatically. This new knowledge can be of great assistance to instructors and students, once it is translated out of its technical terminology and mined for import to the classroom.

Thankfully, someone has done much of this work — with the aim of helping educators.

Since 2002, University of Virginia cognitive scientist Daniel Willingham has sifted through the gold-standard, controlled-variable, peer-reviewed scientific research (as opposed to less rigorous “educational” research). In jargon-free columns in the American Educator, a quarterly professional journal (with free online access provided by the American Federation of Teachers), Willingham shares the nuggets most useful to instructors.

What science has discovered is different in some areas from what has been claimed by various educational philosophies. Frankly, the science is not necessarily what we wanted to hear. A summary might be: “Evolution has given humans a brain designed to help children learn a language naturally, but other learning is hard work.”

The good news is that science has identified what does work to make study and instruction more effective and efficient. Willingham presents both the findings and the science that supports them. Most of his examples reference K-12 instruction, but for college-level and high school instructors, combining Willingham’s research summaries with the practical tips in the book Make It Stick (see the Read Recs tab above) provides a marvelous summary of the new scientific consensus on learning.

A full listing of Willingham’s articles is available at http://www.aft.org/newspubs/periodicals/ae/authors5.cfm

Of the 26 columns published to date, below are brief summaries of 9 that I believe are especially relevant to chemistry. Trust me: the summaries below do not do the articles justice. The hope is to entice you to click the link to the column and explore topics of interest.

To focus on a “science-instruction” perspective, I have “re-titled” the questions addressed by each article as follows:

- Making Learning Enjoyable

- Why Knowledge In Memory Is Important

- The Value of Spaced Study – and Frequent Quizzes

- Helping Students Build Effective Study Habits

- Why Learning Is Concrete First, then Conceptual

- How To Help Students Construct Memory and Understanding

- Why Practice Beyond Mastery Is Necessary

- Can Critical Thinking Be Taught?

- Teaching and Learning Factual, Procedural, and Conceptual Knowledge

I’d suggest: pick a topic above, check the summary below, then try a column or two. For topics in addition to what is in the articles, see Willingham’s book Why Don’t Students Like School?, available in paperback for under $12.

Article Summaries:

- Making Learning Enjoyable

The article is Why Don’t Students Like School? at:

https://www.aft.org/sites/default/files/periodicals/WILLINGHAM%282%29.pdf

Willingham diagrams the interaction of working and long-term memory. In a human brain which evolved to support the “fluent remembering” required for speech, successful problem solving favors remembering how a similar problem was solved in the past. One welcome finding is that “solving problems brings pleasure” if a problem is somewhat challenging but solvable with guidance.

In the age of the internet and calculators, why is it important to memorize? As a scientist, Willingham carefully qualifies most of his descriptions of research, so his response here is noteworthy:

“Data from the last 30 years lead to a conclusion that is not scientifically challengeable: thinking well requires knowing facts, and that’s true not simply because you need something to think about. The very processes that teachers care about most—critical thinking processes like reasoning and problem solving—are intimately intertwined with factual knowledge that is in long-term memory (not just in the environment).”

He also cites recent studies indicating “that children do differ in intelligence, but intelligence can be changed through sustained hard work.”

- Why Knowledge In Memory Is Important

The article How Knowledge Helps: It Speeds and Strengthens Reading Comprehension, Learning—and Thinking is at: http://www.aft.org/newspubs/periodicals/ae/spring2006/willingham.cfm

In learning, Willingham documents the Matthew Effect: “Those with a rich base of factual knowledge find it easier to learn more—the rich get richer.” When listening or reading, being able to fluently recall knowledge in memory helps in making inferences that improve comprehension. In addition, when students have more background knowledge, space is more likely to be available in working memory to identify and process for long-term memory the conceptual implications: “Whereas novices focus on the surface features of a problem, those with more knowledge focus on the underlying structure of a problem.”

Also noted are studies in science education showing that, to improve students’ problem solving abilities, teaching “problem-solving strategies” was less effective than “improving students’ knowledge base.”

- The Value of Spaced Study – and Frequent Quizzes

The column is Allocating Student Study Time: “Massed” versus “Distributed” Practice at: http://www.aft.org/newspubs/periodicals/ae/summer2002/willingham.cfm

Is “cramming” a smart way to study? In research comparing students who studied for 8 sessions in one day to those who studied in 2 sessions a day for 4 days, those who spaced their practice were able to remember more than twice as much on a quiz a week later. Other studies showed positive effects of spaced practice on vocabulary retention on tests eight years later.

Willingham suggests:

- Let students know how “spaced practice” will help on final exams and in recalling information when it is needed in future courses.

- To encourage spaced study, schedule frequent graded quizzes that require recall from memory.

- Space topics to include a review of early fundamentals at later points in courses.

- Helping Students Build Effective Study Habits

The article is What Will Improve a Student’s Memory? at https://www.aft.org/sites/default/files/willingham_0.pdf

What science-based advice can we offer students on how to study? Willingham reviews

- How memory is the residue of thought: you remember what you think about.

- How to focus on thought about meaning.

- Identifying and remembering what is important during self-study.

- Learning “distinctive cues” that assist in memory retrieval.

- Why re-copying notes and highlighting is less efficient than “self-quizzing.”

- The value of mnemonics and visual imagery.

- The need to “overlearn” fundamentals.

The book Make It Stick by Brown, Roediger, and McDaniel (see the Read Recs tab) does a more detailed review of study strategies such as self-quizzing (including flashcards), summary sheets, interleaved practice, and elaboration, but Willingham’s explanation of the cognitive principles sets the stage for understanding why those strategies work.

- Why Learning Is Concrete First, then Conceptual

Inflexible Knowledge: The First Step to Expertise is at: http://www.aft.org/newspubs/periodicals/ae/winter2002/willingham.cfm

Willingham notes that much of what is deprecated as “rote learning” is actually “inflexible knowledge:” knowledge with a narrow meaning that is not tied to a deeper conceptual structure. While organizing knowledge by its deeper structure is the goal in learning, he cites extensive evidence that during initial moving of information into memory, “the mind much prefers that new ideas be framed in concrete rather than abstract terms.”

His advice for teachers?

“If we minimize the learning of facts out of fear that they will be absorbed as rote knowledge, we are truly throwing the baby out with the bath water…. What turns the inflexible knowledge of a beginning student into the flexible knowledge of an expert seems to be a lot more knowledge, more examples, and more practice.”

- How To Help Students Construct Memory and Understanding

Students Remember … What They Think About is at: http://www.aft.org/newspubs/periodicals/ae/summer2003/willingham.cfm

How do we move students from “shallow” learning of facts to seeing the deeper structure that conceptually organizes facts and procedures? The brain tends to remember what it thinks about and elements of the context in which content is encountered. That context is a key to meaning, and the context is tagged to the knowledge in memory if the context is also thought about.

One consequence of this rule is that while students are more likely to remember what they “discover,” such learning must be done carefully. “Students will remember incorrect ‘discoveries’ just as well as correct ones.”

Willingham describes how “study guides” for text assignments can be especially helpful if questions steer students toward linkages that construct deeper understanding.

- Why Practice Beyond Mastery Is Necessary

The article is Practice Makes Perfect—but Only If You Practice Beyond the Point of Perfection at: http://www.aft.org/newspubs/periodicals/ae/spring2004/willingham.cfm

In order to minimize forgetting what has been learned, cognitive studies recommend spaced overlearning: practice to perfection in recalling new content, repeated over multiple days. “Regular, ongoing review … past the point of mastery” is necessary to gain expertise.

Willingham notes that experts generally attribute their success not to talent, but to extensive practice in a domain, and that experts generally must practice extensively “for at least 10 years” before they make substantive contributions to their field.

In introductory courses, what knowledge and skills are most important to practice? “Core skills and knowledge that will be used again and again.”

- Can Critical Thinking Be Taught?

The article Critical Thinking: Why Is It So Hard To Teach? is at https://www.readingrockets.org/article/critical-thinking-why-it-so-hard-teach

Willingham’s summary: “Can critical thinking actually be taught? Decades of cognitive research point to a disappointing answer: not really.”

He explains that critical thinking is not, for the most part, a generalized skill. Though students can and should be taught to “look at an issue from multiple perspectives,” or “estimate to check a calculator answer,” doing so requires content knowledge that can be recalled from memory. Most critical thinking strategies are “domain specific:” different, for example, between Pchem and organic synthesis. Though critical thinking strategies can be taught, they nearly always can be explained quickly. In learning, the slow, rate-determining step is moving new information into long-term memory, and, via practice, tagging the information with meaning.

In a final section, he describes why scientific thinking in particular depends on scientific knowledge in memory.

(A 2020 update on this issue by Willingham is at https://www.aft.org/ae/fall2020/willingham )

- Teaching and Learning Factual, Procedural, and Conceptual Knowledge

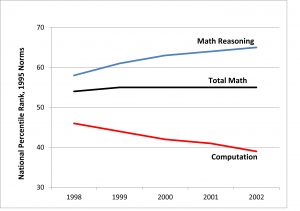

Is It True That Some People Just Can’t Do Math? is at https://www.aft.org/sites/default/files/willingham.pdf

Despite the title, this article contains an excellent review of how to structure instruction in both math and the physical sciences. After a few math-specific topics, starting on article page 3, Willingham discusses how math and science instructors can help students learn factual, procedural, and conceptual knowledge.

To start: students need fluent recall of fundamentals. Why? “Complex problems have simpler problems embedded in them…. Students who automatically retrieve the answers to simple… problems keep their working memory (the mental space in which thought occurs) free to focus on the bigger… problem.”

Willingham cites the value of illustrating concepts with plenty of familiar concrete examples when they are available, and familiar analogies when they are not. As for which is more important to learn (facts, procedures, or concepts), studies say they are intertwined and reinforce each other.

* * * * *

Were any of these articles controversial? Surprising? Feel free to express your views in the Comments below.

# # # # #